How we broke the visibility depth - cost trade-off

The three pillars of observability are a well-known concept we’ve been taught to follow. You need to have your logs, metrics and traces in place in order to be a happy and efficient engineer. Although this sounds amazing in theory, reality is far more complex. As you start to scale out your services and grow, you eventually find yourself shipping and storing huge volumes of data coming from each of these pillars.

This is not the monitoring experience you were promised. Suddenly there is a real life constraint. Someone has to pay for all this data so you can use it to troubleshoot, one day, when you actually need it.

Tale as old as time (about application monitoring costs)

Almost every engineering team can tell the following tale. There is a bug in production that took a lot of time to investigate and figure out. As quality bugs do, it slipped past the CI stage and fell between the cracks of your monitoring pillars. Your existing logs were insufficient to shed light on the problem and no metric indicated an issue was emerging. After spending hours troubleshooting this incident, you take the obvious step of covering this gap from now on, by adding logs and custom metrics that better capture the behavior that could indicate a problem. Your goal: to never have to go through this painful process again.

A week later, someone from management starts noticing the expected monitoring bill for this month is much higher than usual. Deep down you know what happened. That log line you added created a much higher volume than you had anticipated, or that new metric is of much higher cardinality than you planned.

It happened - that frustrating trade-off showed its face. Now you need to decide - should you pay for that extra coverage (even though you don’t need it 99% of the time) or should you remove it to save costs?

The way application monitoring solutions are built forces a cost trade-off

The monitoring solutions that dominate today’s market were built when the world was very different. Engineering teams were mostly working on single-tiered monolith applications that combined user-facing interfaces and data access into a single platform.

Application monitoring platforms used code instrumentation to observe incoming and outgoing communication to the platform. To add as little overhead as possible to the application, they were designed as simple collectors running alongside the application, sending the data to the provider’s backend, where most of the data crunching took place.

This design allowed maximum flexibility for the user. You could access all the data that was collected by the platform, in one central place, where you could query and explore it. From an engineering perspective, centralized architecture made perfect sense. Monolith applications created simple communication patterns, mostly dominated by the user-generated traffic entering the platform. This ensured the data volumes moving from the application to the provider were reasonable, or at least proportional to the volume of user traffic.

Today’s micro-services architectures are built exactly the opposite way. Now each user-facing request triggers numerous API calls between various micro-services. Data volumes rose fast, and companies were left to pick up the bill (which, on average, is estimated at 15% of cloud costs these days).

Yet, studies show that less than 1% of this data is useful and ever explored by users. The other 99% is collected, stored and processed, but never used. Paying for the 99% you care nothing about so you can reach the 1% you do care about is at the heart of the visibility depth - cost trade-off.

A small change can cause a big difference

One of the goals we set for ourselves here at groundcover was to break the visibility depth - cost trade-off, and save engineers the pain of choosing between responsible budgets and data depth.

To tackle this, we built groundcover the other way around: distributed rather than centralized. Moving the decision power close to each node in the cluster allows us to filter out the data we don’t care about, and to reduce the volume of the data we do care about, before sending it anywhere. This means you don’t have to save all the data so you can access it. Instead you save only what has already been categorized as valuable. This simple fact breaks the equation. You can go deep on visibility without paying the price.

To pull this off and to minimize the cost-visibility trade-off, we use a two-fold approach to the data we observe:

1. Turning data into metrics where the data resides

Metrics are an amazing tool for troubleshooting and performance monitoring. Turning raw data into metrics is the most common data reduction operation in the software monitoring world. It forces us as developers to distill the complex context of our running application into a few numbers and labels that describe its state.

The biggest problem with metrics is that you have to craft the metric you want in advance and make sure it's collected and reported. groundcover reduces the data into high-granularity metrics right where the data resides.

So you get many metrics covering monitoring best practices right out of the box, and high volumes of data are reduced into metrics without the data being moved and stored.
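To make the idea of reducing data into metrics where it resides more concrete, here is a minimal sketch in Python. It is not groundcover's implementation: the `NodeAggregator` class, its `observe` and `flush` methods, and the choice of counters are all hypothetical names picked for illustration. The point is only that a per-node component can fold every observed request into a handful of numbers per endpoint, so only the small summary ever needs to leave the node.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class EndpointStats:
    """Running totals for one endpoint (hypothetical structure)."""
    count: int = 0
    errors: int = 0
    total_latency_ms: float = 0.0

    @property
    def mean_latency_ms(self) -> float:
        return self.total_latency_ms / self.count if self.count else 0.0


class NodeAggregator:
    """Folds raw request events into per-endpoint metrics on the node.

    Raw events are never stored; only the running totals are kept.
    """

    def __init__(self) -> None:
        self._stats = defaultdict(EndpointStats)

    def observe(self, endpoint: str, status: int, latency_ms: float) -> None:
        stats = self._stats[endpoint]
        stats.count += 1
        stats.total_latency_ms += latency_ms
        if status >= 500:  # assumption: 5xx responses count as errors
            stats.errors += 1

    def flush(self) -> dict:
        """Return the compact summary to ship, then reset the totals."""
        snapshot = {
            endpoint: {
                "count": s.count,
                "errors": s.errors,
                "mean_latency_ms": s.mean_latency_ms,
            }
            for endpoint, s in self._stats.items()
        }
        self._stats.clear()
        return snapshot
```

However many requests pass through `observe`, the payload returned by `flush` grows with the number of distinct endpoints rather than with traffic volume.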

2. Capture only what you care about

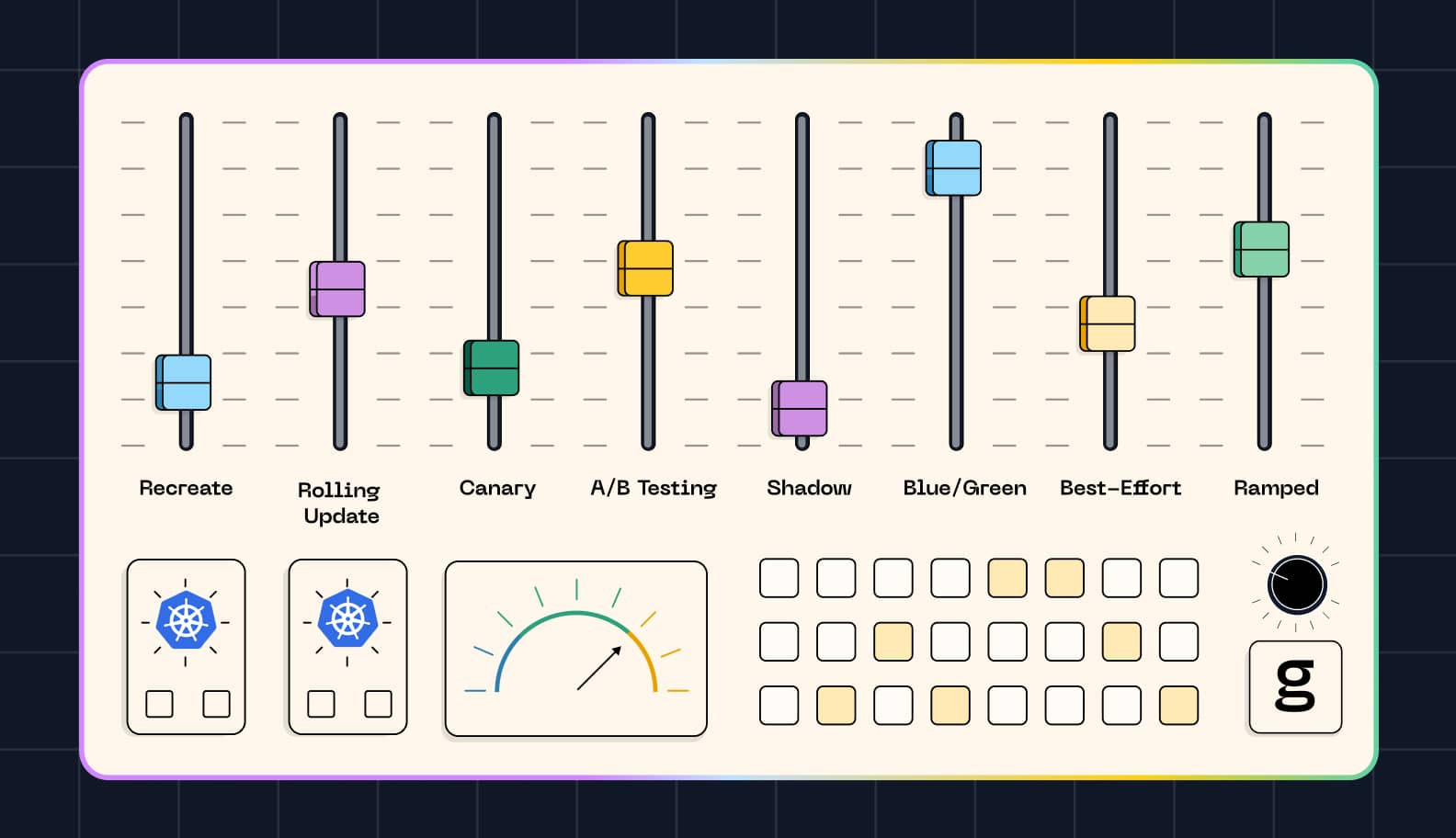

To control the performance impact and cost caused by the immense data volumes sent to the monitoring provider, most monitoring tools offer the concept of ‘random sampling’. Basically, they offer teams an intuitive knob to control the volume of data being ingested for the purpose of monitoring.

Say your cluster is facing 1M req/s. According to APMs, you just sample 1 in 100 requests and take this number down to 10K req/s. You save on performance and cost, but supposedly still get fair coverage of things.

But there’s a clear downside to that. What happens if only 1 request of the 1M per second is interesting? You’ll end up sampling and storing 10K requests that are probably all healthy and uninteresting. Moreover, you’re not even guaranteed to capture the one request you really care about. groundcover transforms random sampling into what we call smart capturing.
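The arithmetic behind this example can be checked with a few lines of Python. This is a sketch under simple assumptions: sampling is uniform and independent per request, and the function names are hypothetical. With 1-in-100 sampling, the single interesting request has only about a 1% chance of being kept.

```python
def sampled_per_second(requests_per_second: int, one_in_n: int) -> int:
    """Expected number of requests kept per second when sampling 1 in N."""
    return requests_per_second // one_in_n


def capture_probability(one_in_n: int, interesting_requests: int = 1) -> float:
    """Chance that at least one of the interesting requests is sampled,
    assuming each request is kept independently with probability 1/N."""
    miss_one = 1 - 1 / one_in_n
    return 1 - miss_one ** interesting_requests


kept = sampled_per_second(1_000_000, 100)   # 10,000 requests stored per second
chance = capture_probability(100)           # about 0.01 for one interesting request
```

In other words, you store 10K requests every second and still miss the one that matters roughly 99 times out of 100.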

As every request passes through our agent, the agent decides whether that request is worth storing. It means you can store that 1 out of 1M requests, without all the irrelevant data that you didn’t ask for. Did someone say a needle in a stack of needles?
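As an illustration of the difference between random sampling and deciding per request, here is a minimal Python sketch. The capture rules used here (a 5xx status or a latency above a threshold) and the names `Request`, `should_capture` and `capture` are assumptions made for the example; the article does not describe the rules the groundcover agent actually applies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    path: str
    status: int
    latency_ms: float


def should_capture(req: Request, latency_threshold_ms: float = 500.0) -> bool:
    """Hypothetical rule: keep requests that failed or were unusually slow."""
    return req.status >= 500 or req.latency_ms > latency_threshold_ms


def capture(requests, latency_threshold_ms: float = 500.0) -> list:
    """Inspect every request, but store only the ones the rule flags."""
    return [r for r in requests if should_capture(r, latency_threshold_ms)]


# 999 healthy requests and a single failing one.
traffic = [Request("/checkout", 200, 12.0) for _ in range(999)]
traffic.append(Request("/checkout", 503, 40.0))

stored = capture(traffic)  # only the failing request is kept
```

Every request is examined, so the interesting one cannot be missed by chance, while the healthy 999 are never stored.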
