
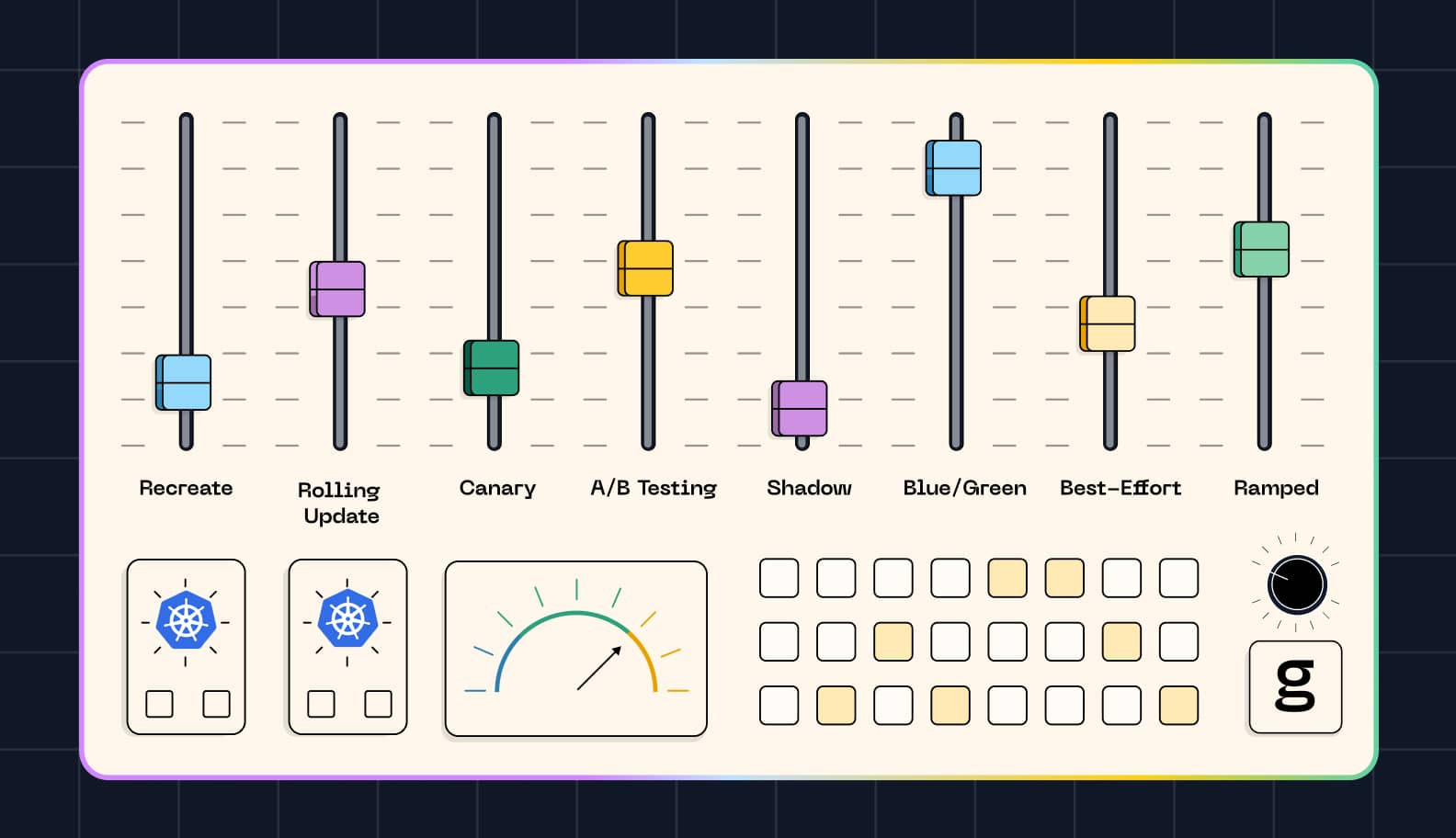

One of Kubernetes's killer features is that it offers a flexible way to deploy applications. Admins can choose from a variety of deployment strategies, each of which offers a different approach to application lifecycle management. Depending on factors like application availability requirements or how carefully you want to be able to test a new deployment before entrusting mission-critical workloads to it, one Kubernetes deployment strategy may be a better fit than another.

To provide guidance on how to select the best deployment strategy for a given workload, this article compares eight popular Kubernetes deployment techniques, explaining their pros and cons. It also offers tips on optimizing your Kubernetes deployment strategy no matter which type or types of deployments you choose.

What is a Kubernetes deployment strategy?

A Kubernetes deployment strategy is the configuration that determines how Kubernetes runs an application and how it rolls out changes to that application. Deployment strategies are typically defined in YAML files, which describe how Kubernetes should deploy the initial pods and containers associated with an app, as well as how it should manage updates over the course of the application’s lifecycle.

Having different deployment strategy options is part of what makes Kubernetes so powerful and flexible. Depending on what an application does, you may need to manage its deployment in a specific way.

For example, with some applications, it’s possible to run multiple versions of the app at the same time within the same Kubernetes cluster. In that case, you could use a deployment strategy that updates application instances one by one. But this typically wouldn’t work if all application instances need to connect to a shared database or maintain a shared global state. An appropriate deployment strategy for that scenario would require updating all application instances at the same time, in order to maintain consistency between versions.

Top 8 Kubernetes deployment strategies

To illustrate what Kubernetes deployment strategies look like in practice, here are eight examples of popular deployment patterns.

1. Recreate deployment

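A recreate deployment tells Kubernetes to terminate all existing pods of a deployment before creating any new ones. Recreate deployment strategies are useful for situations where you need all application instances to run the same version at all times. The trade-off is a brief outage: between the moment the old pods shut down and the moment the new ones become ready, no instance is available to serve requests.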

To configure a recreate deployment, set the strategy type to Recreate in your deployment’s spec. For example, a deployment with three pod replicas that uses the recreate strategy maintains a consistent version across every replica, because old and new pods never run side by side.
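A minimal sketch of such a manifest appears below. The name my-app, its labels, the container port, and the image reference are hypothetical placeholders; the part that actually selects the strategy is the strategy block with type: Recreate.

```yaml
# Sketch only: my-app, its labels, and the image are hypothetical placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  strategy:
    # Terminate every old pod before any new pod is created.
    type: Recreate
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: registry.example.com/my-app:2.0
          ports:
            - containerPort: 8080
```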

2. Rolling deployment

A rolling deployment (which is the default deployment strategy that Kubernetes uses if you don’t specify an alternative) manages pod updates by applying them incrementally. In other words, it replaces pods a few at a time rather than all at once, starting new pods and terminating old ones until every replica runs the new version.

Rolling updates are a useful deployment strategy when it’s important to avoid downtime. Since this approach updates pod instances incrementally, it ensures that while one pod instance is being updated, other instances remain available to handle requests.

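To configure it explicitly, set the strategy type to RollingUpdate. The optional maxSurge and maxUnavailable parameters control how many pods Kubernetes may add above, or take away from, the desired replica count at any point during the update.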
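The manifest below is a sketch of a rolling deployment, again assuming a hypothetical app named my-app. Setting maxSurge to 1 and maxUnavailable to 0 makes Kubernetes replace pods strictly one at a time (the default for both parameters is 25 percent). The readiness probe and its /healthz path are assumptions; Kubernetes only counts a new pod as available once it passes its readiness check, which is what lets the old pods keep serving until their replacements are ready.

```yaml
# Sketch only: my-app, the image, and the /healthz endpoint are hypothetical.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1         # at most one pod above the desired count during the update
      maxUnavailable: 0   # never drop below the desired count of available pods
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: registry.example.com/my-app:2.0
          ports:
            - containerPort: 8080
          readinessProbe:
            httpGet:
              path: /healthz
              port: 8080
```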

3. Blue/green deployment

In a blue/green deployment strategy, you maintain two distinct Kubernetes deployments – a blue deployment and a green one – and switch traffic between them. The advantage of this approach is that it allows you to test one version of your deployment and confirm that it works properly before directing traffic to it.

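To implement a blue/green deployment, first create two Kubernetes deployments, one for each version of the application. Give each deployment’s pod template a unique label, such as version: blue and version: green, so that a service can tell their pods apart.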

Then, define a Kubernetes service whose selector determines which of the two deployments receives requests. For example, a service whose selector matches the label “blue” sends traffic only to the blue deployment’s pods.

To switch traffic to the other deployment, change the selector’s value to “green” and reapply the service.
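Below is a minimal sketch, assuming a hypothetical app named my-app whose blue and green deployments differ only in name, image, and the version label on their pod templates. Only the green deployment is shown; the service currently selects the blue pods.

```yaml
# Green deployment (sketch). The blue deployment would be identical except for
# its name, its image, and version: blue. All names here are hypothetical.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-green
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
      version: green
  template:
    metadata:
      labels:
        app: my-app
        version: green
    spec:
      containers:
        - name: my-app
          image: registry.example.com/my-app:2.0
---
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  selector:
    app: my-app
    version: blue   # change to green to cut all traffic over to the green deployment
  ports:
    - port: 80
      targetPort: 8080
```

Because the cutover is a single change to the service’s selector, rolling back is equally quick: set the value to blue again.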

Blue/green deployments minimize the risk of downtime because they allow you to vet a new deployment fully before using it to handle production traffic. A downside, however, is that blue/green deployments require you to run two complete instances of your application at the same time. This is not an efficient use of resources, since only one of the instances is handling traffic.

4. Canary deployment

A canary deployment strategy switches traffic between distinct deployments gradually. It’s similar to a blue/green strategy in that it requires two different deployments. But whereas a blue/green deployment cuts traffic over from one deployment to the other all at once, the canary method directs some requests to one deployment while sending others to the other deployment.

The advantage of this approach is that it allows you to detect problems with one of the deployments before they impact all users. It’s called a “canary” deployment because it’s analogous to using canaries in coal mines to detect the buildup of toxic gases before they reach a level that would harm humans, since canaries are especially sensitive to gases like carbon monoxide.

To set up a canary deployment, first create two deployments for your application. The number of pod replicas in each deployment should reflect the percentage of traffic you want that deployment to handle. For instance, if you want one deployment to receive roughly 60 percent of your traffic and the other roughly 40 percent, create 6 replicas in the first deployment and 4 in the second. The pods in both deployments should carry the same application label.

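Then, create a service whose selector matches only that shared application label, so that it spreads requests across the pods of both deployments. Because the service balances traffic across individual pods rather than across deployments, the resulting split is approximate rather than exact.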
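The manifests below sketch the 60/40 example, assuming a hypothetical app named my-app. Each deployment adds a track label so that the two deployments’ selectors don’t overlap, while the service selects only the shared app label and therefore spreads requests across all ten pods.

```yaml
# Stable version (sketch): 6 of 10 pods, so roughly 60 percent of requests.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-stable
spec:
  replicas: 6
  selector:
    matchLabels:
      app: my-app
      track: stable
  template:
    metadata:
      labels:
        app: my-app
        track: stable
    spec:
      containers:
        - name: my-app
          image: registry.example.com/my-app:1.0
---
# Canary version (sketch): 4 of 10 pods, so roughly 40 percent of requests.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-canary
spec:
  replicas: 4
  selector:
    matchLabels:
      app: my-app
      track: canary
  template:
    metadata:
      labels:
        app: my-app
        track: canary
    spec:
      containers:
        - name: my-app
          image: registry.example.com/my-app:2.0
---
# Selects only the shared app label, so pods from both deployments receive traffic.
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  selector:
    app: my-app
  ports:
    - port: 80
      targetPort: 8080
```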

To modify the balance between traffic over time in order to switch traffic gradually from one deployment to the other, scale the replicas within each deployment accordingly using the kubectl scale deployment command.

5. A/B testing deployment

In an A/B testing deployment, you run two distinct deployments and route traffic between them based on request type or user characteristics.

For example, imagine you want to distinguish between requests from “testing” users and requests from “production” users. You could do this by differentiating between user types in request headers and routing requests on this basis. Requests with the header end-user: testing would go to one deployment, while those with end-user: production would route to another.

To implement an A/B testing deployment strategy, first create two deployments. Then, install a service mesh or ingress controller, such as Istio, and configure it with a routing rule that selects a deployment based on header strings.

For instance, an Istio virtual service for an app called my-app can send requests that carry end-user: testing in their headers to one deployment while routing all other requests to the other deployment.
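The sketch below assumes Istio is installed, that a Kubernetes service named my-app selects the pods of both deployments, and that the two deployments’ pod templates are labeled version: a and version: b (all hypothetical names). The VirtualService matches on the end-user header, and the DestinationRule defines the subsets it routes to; Istio needs both objects for subset-based routing.

```yaml
# Sketch only: host, subset names, and version labels are hypothetical.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: my-app
spec:
  hosts:
    - my-app
  http:
    # Requests with the header end-user: testing go to deployment B.
    - match:
        - headers:
            end-user:
              exact: testing
      route:
        - destination:
            host: my-app
            subset: version-b
    # Every other request falls through to deployment A.
    - route:
        - destination:
            host: my-app
            subset: version-a
---
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: my-app
spec:
  host: my-app
  subsets:
    - name: version-a
      labels:
        version: a
    - name: version-b
      labels:
        version: b
```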

6. Shadow deployment

A shadow deployment strategy involves running two deployments. One deployment handles all requests and routes responses back to users. Meanwhile, the second deployment runs “in the shadows” and also processes some or all requests, but the responses don’t go back to users. Instead, they’re analyzed for testing purposes and then dropped.

The value of a shadow deployment is that it lets you test a deployment by feeding it genuine user requests without relying on it to process those requests for production users. If the deployment is buggy, it won’t impact users, but you’ll still be able to detect the issue.

To create a shadow deployment in Kubernetes, first set up two deployments for your application. Next, using a service mesh such as Istio, create a virtual service that sends traffic to the primary instance and mirrors a copy of that traffic to the shadow instance.
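The sketch below uses Istio’s traffic mirroring, assuming a service named my-app in front of both deployments and hypothetical version: v1 and version: v2 labels that identify the primary and shadow pods. Istio treats the mirrored copy as fire-and-forget and discards the shadow’s responses, so users only ever receive responses from the primary.

```yaml
# Sketch only: host, subsets, and version labels are hypothetical.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: my-app
spec:
  hosts:
    - my-app
  http:
    - route:
        # Every request is served by the primary deployment.
        - destination:
            host: my-app
            subset: primary
          weight: 100
      # A copy of each request also goes to the shadow deployment;
      # its responses are discarded.
      mirror:
        host: my-app
        subset: shadow
      mirrorPercentage:
        value: 100.0   # lower this to mirror only a fraction of requests
---
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: my-app
spec:
  host: my-app
  subsets:
    - name: primary
      labels:
        version: v1
    - name: shadow
      labels:
        version: v2
```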

7. Best-effort controlled rollout

A best-effort controlled rollout is similar to a canary deployment in that it gradually switches traffic from one deployment to another. But it does so in a more controlled manner, often by using predefined conditions (like crossing a certain CPU usage threshold for one deployment) to determine when to change the amount of traffic going to each deployment.

To implement a best-effort controlled rollout, you would typically use a third-party progressive delivery controller such as Argo Rollouts and configure it to manage your rollout based on predefined criteria.

This is a type of canary deployment. But unlike a generic canary deployment strategy, where you simply add replicas to each deployment over time, the rollout in this case is carefully controlled based on specific criteria.
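The sketch below uses Argo Rollouts, assuming its controller is installed and a Prometheus server is reachable at a hypothetical in-cluster address. The Rollout shifts weight in steps while a background analysis evaluates a CPU query; if the condition fails, Argo Rollouts aborts the rollout and shifts traffic back to the stable version. The AnalysisTemplate name, the query, and the threshold of 2 CPU cores are illustrative assumptions, not recommended values.

```yaml
# Sketch only: names, image, Prometheus address, query, and threshold are hypothetical.
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: my-app
spec:
  replicas: 10
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: registry.example.com/my-app:2.0
  strategy:
    canary:
      # Background analysis runs from step 1 onward; a failure aborts the rollout.
      analysis:
        templates:
          - templateName: cpu-usage-check
        startingStep: 1
      steps:
        - setWeight: 20
        - pause: {duration: 5m}
        - setWeight: 50
        - pause: {duration: 5m}
        - setWeight: 100
---
apiVersion: argoproj.io/v1alpha1
kind: AnalysisTemplate
metadata:
  name: cpu-usage-check
spec:
  metrics:
    - name: cpu-usage
      interval: 1m
      failureLimit: 1
      # Pass while total CPU use across my-app pods stays under 2 cores.
      successCondition: result[0] < 2
      provider:
        prometheus:
          address: http://prometheus.monitoring:9090
          query: |
            sum(rate(container_cpu_usage_seconds_total{pod=~"my-app-.*", container!=""}[2m]))
```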

8. Ramped slow rollout

A ramped slow rollout replaces pod instances incrementally, with delays between the replacements. It’s similar to a rolling deployment, but a key difference is that with a ramped slow deployment, there is a pause between each update. This provides time to run tests and confirm that the previous update was successful before moving on to the next one.

To implement a ramped slow rollout, use a controller such as Argo Rollouts and configure a pause after each step of the rollout.
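A sketch with Argo Rollouts follows, again with a hypothetical app name and image. Each setWeight step is followed by a pause, and the empty pause waits indefinitely until an operator promotes the rollout, for example with kubectl argo rollouts promote my-app from the Argo Rollouts kubectl plugin. Without a traffic router configured, Argo Rollouts approximates each weight by scaling the number of new-version pods, so with ten replicas a weight of 10 corresponds to roughly one new pod.

```yaml
# Sketch only: my-app, the image, and the step sizes and durations are hypothetical.
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: my-app
spec:
  replicas: 10
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: registry.example.com/my-app:2.0
  strategy:
    canary:
      steps:
        - setWeight: 10
        - pause: {duration: 10m}   # time to run checks before the next step
        - setWeight: 30
        - pause: {duration: 10m}
        - setWeight: 60
        - pause: {}                # wait until someone promotes the rollout
        - setWeight: 100
```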

Choosing the right deployment strategy by use case

The main consideration for deciding which deployment strategy to adopt is the type of use case you need to support. Here’s a look at common use cases and the best deployment strategies for each one.

Stateless applications

For stateless applications, a simple rolling deployment usually makes the most sense. If there is no application state, it’s not typically important to keep pod instances in sync, so you can update them one by one without causing problems.

Stateful applications

For most stateful applications, a recreate deployment strategy works best. Recreate deployments ensure that application versions remain consistent across all instances, helping to keep state in sync.

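Note as well that typically, you’d use a StatefulSet instead of a deployment to run a stateful application. (For details, check out our article on Kubernetes StatefulSet vs deployment.) StatefulSets offer their own update strategies (RollingUpdate, the default, and OnDelete) rather than a Recreate type, but you can reproduce most of the deployment strategies described above with StatefulSets as well as with deployments.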

High-traffic applications

For applications that receive a lot of traffic on a continuous basis, a canary deployment or one of its variants (like a best-effort controlled rollout or a ramped slow rollout) is usually the best fit. These methods make it possible to route traffic between multiple deployments, which in turn helps to balance load and ensure that no one deployment becomes overwhelmed.

Mission-critical and zero-downtime apps

For use cases where you can’t tolerate any downtime, consider a blue/green deployment. This approach allows you to validate a new deployment fully before sending traffic to it.

A shadow deployment could also be a good choice for this use case. It would allow you to perform real-user testing on a new deployment before directing requests to it.

A/B testing deployment strategies may also work well for certain mission-critical apps, especially if only certain users or requests are critical. In that case, you can send the high-value requests to one deployment that you’ve carefully tested, while routing less critical ones to another deployment.

Batch processing and background jobs

For use cases that involve processing data in batches or running background jobs, a simple recreate or rolling deployment strategy typically works well. More complex and advanced deployment strategies aren’t usually necessary for these use cases because you usually don’t need to worry as much about the potential for downtime or running multiple versions of an application at the same time.

Factors to consider when selecting a deployment strategy

Beyond aligning deployment strategies with use cases, it’s also important to consider the following factors:

- Deployment downtime tolerance: The less downtime you can accept for a workload, the more important it is to use a low-risk deployment strategy, like rolling or blue/green Kubernetes deployments.

- Traffic flow and load management: If you need fine-grained control over traffic flow and load balancing, consider an A/B testing or controlled rollout deployment, both of which allow you to route traffic based on predefined rules.

- Failover, rollback, and reversal mechanisms: If it’s important to be able to revert to a previous version of a deployment, use either a blue/green or canary deployment method. These approaches make it possible to switch traffic back to the previous deployment if a newer deployment turns out to be buggy.

- Security and compliance considerations: Some Kubernetes deployments are subject to specific security and compliance requirements. For instance, if you need to ensure that security patches roll out to all application instances (to avoid leaving some vulnerable instances), you’d want a recreate deployment method. Or, if you need to route requests for users based in a certain area to a specific deployment to meet compliance rules that apply to those users, you could use an A/B testing deployment strategy.

- Scalability and auto-healing capabilities: Canary deployments are useful because they provide control over deployment scalability. They can also offer some auto-healing capabilities because Kubernetes will automatically attempt to maintain the number of replicas specified for each deployment – so if some replicas fail, Kubernetes can self-heal by restoring them.

Best practices for a seamless Kubernetes deployment

No matter which deployment strategy you choose, the following best practices can help minimize risk and simplify administration:

- Prefer simplicity: Some deployment strategies (like recreate and rolling Kubernetes deployments) are much simpler than others (like best-effort controlled rollouts). In general, simpler is better. Don’t implement a complex deployment strategy, or one that requires additional tools (like Istio or Argo), unless you need the special capabilities it provides.

- Test deployments: Prior to entrusting production traffic to a deployment, it’s a best practice to test it first. You can do this using synthetic requests, or you can direct real user requests to a deployment via a method like shadow deployments.

- Monitor and observe Kubernetes deployments: To detect issues with a deployment, it’s critical to monitor and observe all application instances. Methods like blue/green and canary deployments are only useful if you have the monitoring and observability data necessary to detect problems with one deployment and route traffic appropriately.

- Consider resource overhead: Deployment strategies that require you to maintain multiple Kubernetes deployments at the same time create more resource overhead (because more deployments require more resources to run). This can lead to poorer performance because Kubernetes cluster resources are tied up with redundant deployments. For this reason, it’s important to evaluate how many spare resources your Kubernetes cluster has and choose a deployment method accordingly.

Optimizing and monitoring Kubernetes deployments with groundcover

When it comes to monitoring Kubernetes deployments and troubleshooting problems, groundcover has you covered. Using hyper-efficient eBPF-based observability, groundcover clues you in, in real time, to deployment performance issues like dropped requests or high latency. We also continuously track CPU, memory, and other performance metrics, so you’ll know right away if any of your Kubernetes deployments are at risk of becoming overwhelmed.

We can’t tell you exactly which deployment strategy is best for a given workload. But we can give you the observability data you need to make an informed decision about Kubernetes deployment strategies.

A balanced approach to Kubernetes deployment

Ultimately, Kubernetes deployment strategies boil down to balancing performance and control on the one hand with risk and complexity on the other. If you just want to deploy an application simply and quickly, Kubernetes lets you do that – although simple deployment methods are sometimes more risky.

You can also opt for more complex and fine-tuned deployment strategies that – like a complex chess move – require more expertise to carry out, but that can pay off in the long run by delivering a better balance between risk and performance.
