What are the Four Golden Signals and Why Do They Matter?

Discover why the 4 Golden Signals are considered such an effective method for understanding information coming out of modern cloud applications and services and how to best utilize them using observability platforms.

There's an old saying that "silence is golden." It means that, in general, no noise is good noise.

Unfortunately, most software systems are rarely silent. They can spit out hundreds of different metrics, log files, and traces – which translates to a lot of noise for Site Reliability Engineering (SRE) teams tasked with monitoring and observing the systems.

That's why observability teams need to live according to another "golden" standard – the Four Golden Signals. As this article explains, the Four Golden Signals help make sense of disparate, complex troves of observability data, allowing SRE teams to assess application performance and system health effectively and efficiently. They're a valuable methodology for Kubernetes monitoring and observing other complex, distributed systems.

What is Site Reliability Engineering?

To explain what the Four Golden Signals mean and why they're important to modern Site Reliability Engineering teams, let's first define this concept.

SRE is an IT discipline focused on managing and optimizing the performance and reliability of software systems. The main idea behind SRE is to apply the principles of software engineering – such as code-based configuration – to application performance monitoring and observability.

People who perform SRE work are called (as you might guess) Site Reliability Engineers, or SREs.

The SRE concept originated at Google in the early 2000s. For a long time, only very large tech companies had SRE roles. But over the past decade or so, as software performance has become a paramount concern for companies of all types, SRE has become commonplace at organizations large and small. Today, virtually any business that has to manage software systems or guarantee certain levels of performance can benefit from SRE.

What are the Four Golden Signals?

Now, let's discuss what the Four Golden Signals mean, and why they're so important to SREs.

The Four Golden Signals are four types of observability insights that, when put together, provide a holistic understanding of the overall health and performance of complex systems. They're not specific metrics or log types; instead, they're types of information that you can measure.

This methodology focuses on four types of signals: latency, traffic, errors, and saturation.

Latency

Latency measures the interval between when a request is sent and when its response is received. For instance, if there is a delay of 100 milliseconds between when a client requests information and when the request is filled, the request has a latency of 100 milliseconds.

High latency can result from network performance issues. It can also stem from inefficient or buggy code within applications that delays processing.
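To make the latency signal concrete, here is a minimal sketch of summarizing request latencies with percentiles rather than an average. The sample values and the `percentile` helper are illustrative assumptions, not output from any particular tool.

```python
import math

def percentile(samples_ms, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples_ms)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]

# Hypothetical request latencies in milliseconds; one request is very slow.
latencies_ms = [100, 95, 110, 102, 98, 105, 97, 101, 99, 900]

p50 = percentile(latencies_ms, 50)   # typical request
p99 = percentile(latencies_ms, 99)   # slowest requests
mean = sum(latencies_ms) / len(latencies_ms)
print(f"p50={p50}ms p99={p99}ms mean={mean}ms")
```

In this sample the single slow request pulls the mean well above what a typical user sees, which is why percentiles are often a more useful view of latency.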

Traffic

Traffic is the total number of requests that an application or service is handling, whether or not they succeed. It's a reflection of the overall load placed on the system.

On its own, traffic doesn't tell you much about how well an application is performing because high or low rates of traffic are not necessarily an issue. However, by correlating traffic with other data, you can track how changes in traffic impact application performance. For instance, if your app has low latency when traffic is low, but latency spikes during upticks in traffic, then you know your app is struggling to perform efficiently when traffic volumes grow.
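As a rough illustration, traffic can be derived by counting request arrivals per second. The timestamps below are made up, and `requests_per_second` is a hypothetical helper that assumes timestamps are expressed in seconds.

```python
import math
from collections import Counter

def requests_per_second(timestamps):
    """Count requests in each one-second bucket (timestamps are in seconds)."""
    return Counter(math.floor(ts) for ts in timestamps)

# Hypothetical arrival times, in seconds since the start of the window.
arrivals = [0.1, 0.4, 0.9, 1.2, 1.3, 2.0, 2.1, 2.5, 2.7, 2.9]

per_second = requests_per_second(arrivals)
peak = max(per_second.values())
print(dict(per_second), "peak:", peak)
```

Bucketing like this makes it easy to line traffic up against latency or saturation for the same seconds.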

Errors

Errors measure the number of requests that either fail or generate an unexpected response. Errors include not just error messages, but also incomplete or corrupt responses.

Obviously, you ideally want your application to produce no errors. However, some level of error is inevitable, and tracking how error rates change alongside other signals is important for gaining insight into overall application health.
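The sketch below shows one way an error rate might be computed so that it covers incomplete responses as well as explicit failures. The `Response` type is hypothetical, and treating only 5xx statuses as errors (while ignoring 4xx client errors) is an assumption you may want to change for your own service.

```python
from dataclasses import dataclass

@dataclass
class Response:
    status: int            # HTTP status code
    complete: bool = True  # False if the body was truncated or corrupt

def is_error(resp):
    """Assumption: server-side failures and damaged bodies count as errors."""
    return resp.status >= 500 or not resp.complete

def error_rate(responses):
    """Fraction of responses that count as errors (0.0 for an empty window)."""
    if not responses:
        return 0.0
    return sum(is_error(r) for r in responses) / len(responses)

window = [
    Response(200),
    Response(200),
    Response(500),                  # explicit failure
    Response(200, complete=False),  # looks successful, but the body is corrupt
    Response(404),                  # client error, not counted here
]
print(error_rate(window))
```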

Saturation

Saturation tells you how many resources your application is consuming relative to the total resources available. For instance, if the app is consuming 80 percent of the total RAM available, its memory saturation rate is 80 percent.

Knowing your saturation levels helps you determine whether a lack of available resources explains other issues, like high latency or error rates. In addition, monitoring saturation allows you to be proactive about allocating more resources to applications that are approaching their saturation limits.
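Saturation is a simple ratio, as this minimal sketch shows. The 80 percent threshold and the function names are illustrative assumptions rather than recommended values.

```python
def saturation_pct(used, total):
    """Resources consumed as a percentage of resources available."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100 * used / total

def needs_more_resources(used, total, threshold_pct=80.0):
    """True once saturation reaches the (assumed) threshold."""
    return saturation_pct(used, total) >= threshold_pct

# Hypothetical container using 4096 MiB of a 5120 MiB memory limit.
print(saturation_pct(4096, 5120))        # memory saturation in percent
print(needs_more_resources(4096, 5120))  # at the threshold, so flag it
```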

How the 4 Golden Signals Impact SRE

The Four Golden Signals go hand-in-hand with SRE not only because they can help SREs make sense of complex sets of observability data, but also because Google explicitly promoted the Four Golden Signals concept in a book about SRE that the company published in 2016.

Thus, while there are a variety of monitoring and performance management strategies that can benefit SRE teams – such as the RED Method, another popular framework – you'll typically hear SREs talk about the Four Golden Signals as the foundation of their approach to performance management, due to the close historical relationship between the Four Golden Signals concept and SRE work.

Why Measure the Golden Signals?

The main reason to track the 4 Golden Signals is that they provide a holistic way of interpreting disparate types of logs, metrics, and traces within distributed systems by distilling the data into four basic categories. Thus, rather than trying to sort through dozens or hundreds of different data points, SREs can consolidate their data into four key signals that provide meaningful insight into application health and performance.

More specifically, measuring the 4 Golden Signals allows teams to achieve the following benefits.

Improved System Reliability

By monitoring signals like saturation rate and error rate, SREs can proactively identify and respond to risks before they lead to crashes. This increases overall reliability and uptime rates.

For example, if you notice an uptick in error messages relative to the normal volume of errors, that's a sign that something is wrong. By investigating the issue before errors reach a critical volume, you maximize your chances of resolving the problem before it affects a substantial number of users.

Root Cause Analysis

Measuring the 4 Golden Signals together allows SREs to correlate different types of insights and identify root causes.

For instance, if latency increases at the same time that saturation rates approach 100 percent, a lack of available resources is a likely root cause of the high latency. This lets you deprioritize other potential causes, like networking issues, so that you can home in on the source of the problem and fix it faster.
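One simple way to quantify how closely two signals move together is a Pearson correlation coefficient. The sketch below uses made-up samples of saturation and latency taken at the same moments; a value near 1 suggests they rise together, although correlation on its own does not prove which one causes the other.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / (spread_x * spread_y)

# Hypothetical samples collected at the same six points in time.
saturation_pct = [50, 60, 70, 85, 95, 99]
latency_ms = [100, 105, 110, 180, 400, 900]

r = pearson(saturation_pct, latency_ms)
print(f"correlation between saturation and latency: {r:.2f}")
```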

Optimized Performance

Measuring resource utilization rates alongside other signals helps SREs optimize overall system performance by determining how many resources to allocate to applications and services.

For example, if saturation rates are low while other performance indicators remain normal, it's a sign that your application may not need as many resources as you've assigned to it. You can then reallocate resources to other applications. This leads to an optimal balance between resource usage and performance.

Informed Decision Making

Along similar lines, the Golden Signals can help SREs with tasks like capacity planning and setting priorities. Knowing how performance correlates with resource utilization rates allows SREs to make informed decisions about how many resources to allocate.

Plus, in cases where there simply aren't enough resources to go around, SREs can choose which services or outcomes to prioritize. They might decide that accepting higher latency is worth it if it keeps the volume of errors in check, for example.

Enhanced User Experience

Ultimately, measuring the 4 Golden Signals helps teams optimize the end user experience. Latency and errors directly measure how an application performs from the user's perspective, and traffic shows how much demand users are placing on it. Saturation is more of a backend insight, but the ability to correlate it with the other signals helps SREs make decisions that optimize the overall user experience.

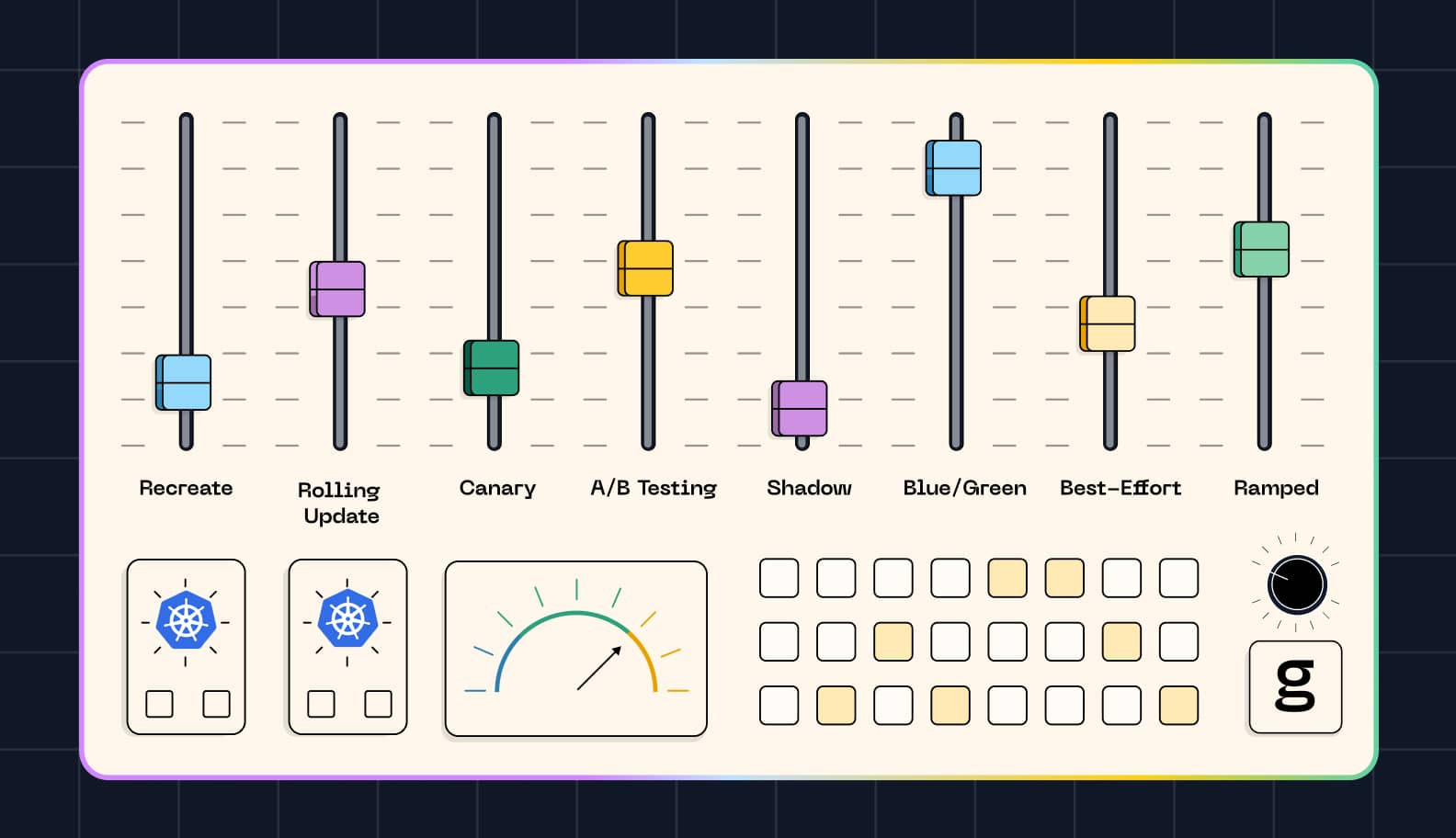

Golden Signals vs RED vs USE Methods

As we mentioned, the Golden Signals aren't the only framework available for interpreting system health. Other popular strategies include:

- The RED Method, which focuses on rate (meaning the number of requests per second), error volume, and request duration.

- The USE method, which tracks utilization, saturation, and errors.

There is a fair amount of overlap between the Golden Signals and these other methods because they focus largely on the same types of data. However, the Golden Signals are arguably the most comprehensive of the three because they track four key types of performance indicators. The RED Method leaves out saturation, while USE doesn't track any signal equivalent to latency or traffic. (High utilization in the USE Method can hint at latency problems, but it's not as complete or direct a measure of latency as you get from the Golden Signals.)
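The overlap can be expressed as a small lookup. The mapping of each framework's terms onto Golden Signal categories below (rate to traffic, duration to latency, utilization to nothing) is our own simplification for illustration, not an official equivalence.

```python
GOLDEN = {"latency", "traffic", "errors", "saturation"}

# Assumed mapping: framework term -> closest Golden Signal (None if no close match).
RED = {"rate": "traffic", "errors": "errors", "duration": "latency"}
USE = {"utilization": None, "saturation": "saturation", "errors": "errors"}

def missing_signals(framework):
    """Golden Signals that have no close equivalent in the given framework."""
    covered = {signal for signal in framework.values() if signal is not None}
    return GOLDEN - covered

print("RED lacks:", sorted(missing_signals(RED)))
print("USE lacks:", sorted(missing_signals(USE)))
```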

You could argue that RED and USE are preferable to the Golden Signals because they are simpler. But there's also a good case to be made that the Golden Signals are the best monitoring method because they focus on the broadest set of information, which means they maximize SREs' ability to correlate data.

Getting Started with the Golden Signals

Because the Golden Signals are categories of information rather than specific types of data, you can't simply tell your observability tools to start monitoring each signal for you. Instead, you need to determine which data sources are available, and then collect and analyze them in ways that align with the Golden Signals method.

For instance, a real-world observability app that is configured based on the Golden Signals concept might use the following types of data sources to provide each signal:

- Using log files or traces, it can measure the latency between when an application receives a request and when the response is sent back to the client.

- It can collect metrics from the application that track the total requests received per second, which measures traffic rates.

- It can access logs or error metrics to track error rates.

- Using key metrics generated by the application or agents that run on the host system, it can track CPU and memory usage to measure saturation.
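To illustrate how the data sources above could feed all four signals at once, here is a minimal sketch. It assumes a made-up log format of `<seconds> <status> <duration_ms>` per line and memory figures supplied by some host agent; real log formats and metric sources will differ.

```python
from dataclasses import dataclass

@dataclass
class Snapshot:
    requests_per_second: float    # traffic
    error_rate: float             # errors
    max_latency_ms: float         # latency
    memory_saturation_pct: float  # saturation

def summarize(log_lines, window_seconds, mem_used_mib, mem_total_mib):
    """Derive one snapshot of the four signals from logs plus a host metric."""
    durations, errors = [], 0
    for line in log_lines:
        _timestamp, status, duration_ms = line.split()
        durations.append(float(duration_ms))
        if int(status) >= 500:  # assumption: only 5xx counts as an error
            errors += 1
    total = len(durations)
    return Snapshot(
        requests_per_second=total / window_seconds,
        error_rate=errors / total if total else 0.0,
        max_latency_ms=max(durations, default=0.0),
        memory_saturation_pct=100 * mem_used_mib / mem_total_mib,
    )

# Hypothetical two-second window of access-log lines.
lines = ["0.2 200 90", "0.7 200 110", "1.1 500 300", "1.8 200 95"]
snapshot = summarize(lines, window_seconds=2, mem_used_mib=1024, mem_total_mib=4096)
print(snapshot)
```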

In case it's not clear, the specific types of data that align with the Golden Signals will vary from one application or system to another. Some applications and systems produce a lot of key metrics that are helpful for tracking the four signals. For example, there is a fairly wide variety of Kubernetes metrics that you can collect if you use Kubernetes to deploy your apps. But in other cases, you might have to rely primarily on log files, traces, or monitoring agents that are external to the application to collect the data you need.

Considerations for Monitoring

An effective monitoring strategy based on the Golden Signals should address the following:

- Data collection: As we mentioned, you need to know which types of data to collect and which mechanisms (like monitoring agents) are available to collect them.

- Alerting mechanisms: In addition to collecting data, you should set up alerts so that you'll know when something is awry with one of the signals – such as a sudden spike in latency or error rates relative to the baseline.

- Visualization tools: The ability to visualize the signals can help to interpret data and find trends within complex data sets – especially through visualizations that correlate multiple signals together.
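As a sketch of the alerting idea, the check below flags a value that sits far above a recent baseline. The three-standard-deviation cutoff and the `is_spike` name are assumptions for illustration; production alerting rules are usually tuned per signal.

```python
import statistics

def is_spike(baseline, current, max_sigma=3.0):
    """True if current is more than max_sigma standard deviations above the baseline mean."""
    mean = statistics.fmean(baseline)
    stdev = statistics.stdev(baseline)  # needs at least two baseline points
    if stdev == 0:
        return current > mean
    return (current - mean) / stdev > max_sigma

# Hypothetical recent latency readings in milliseconds.
baseline_latency_ms = [100, 102, 98, 101, 99]

print(is_spike(baseline_latency_ms, 101))  # within normal variation
print(is_spike(baseline_latency_ms, 140))  # well above the baseline
```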

Choosing the Right Monitoring Tools and Techniques

When it comes to measuring the Golden Signals, there are multiple types of tools that can help:

- Infrastructure Monitoring Tools: These are useful for tracking saturation rates by measuring data like total CPU and memory consumption.

- Application Performance Management (APM) Tools: APM software can collect a variety of metrics, log data, and traces to measure latency, traffic, and errors.

- Synthetic Monitoring Tools: Synthetic monitoring lets you issue simulated requests or traces and track the application's response. This type of tool is useful in situations where organic logs, metrics, and traces aren't enough on their own to measure the Golden Signals.

These are overlapping categories, and some observability and monitoring tools offer more than one type of monitoring feature – so you won't necessarily need multiple tools. However, you will want to make sure the tools you use provide all of the capabilities necessary to align your monitoring strategy with the Golden Signals method.

How to Detect Anomalies in Your System

Detecting anomalies based on the Golden Signals is easy enough when your application doesn't change often. For example, if traffic rates are constant across different times of day and days of the week, you'll know that a sudden spike in traffic is an anomaly.

But what if your application is constantly changing? What if there is no "normal" baseline against which you can measure anomalies? In that case, anomaly detection hinges on your ability to correlate signals such that you'll know whether a sudden change is actually an anomaly or is a normal part of application behavior.

For example, imagine that traffic and saturation rates increase at the same time. This is expected because more traffic will naturally increase the load placed on your app, leading to higher rates of CPU and memory usage.

But if saturation increases without a corresponding uptick in traffic, that would likely be an anomalous event that you should investigate. It could result from a bug in your application code that creates a memory leak, or zombie containers that have failed Kubernetes health checks but that the system can't kill for some reason, leaving them to suck up CPU.
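The comparison described above can be sketched as a simple rule. It assumes, as a simplification, that saturation should grow roughly in proportion to traffic; the 10-point tolerance and the function names are hypothetical.

```python
def pct_change(before, after):
    """Percentage change from before to after."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return 100 * (after - before) / before

def unexplained_saturation(traffic_before, traffic_after,
                           sat_before, sat_after, tolerance_pct=10.0):
    """True when saturation grew noticeably faster than traffic did."""
    saturation_growth = pct_change(sat_before, sat_after)
    traffic_growth = pct_change(traffic_before, traffic_after)
    return saturation_growth - traffic_growth > tolerance_pct

# Traffic and saturation rise together: expected behavior.
print(unexplained_saturation(1000, 1500, 40, 58))
# Saturation jumps while traffic is flat: worth investigating.
print(unexplained_saturation(1000, 1000, 40, 70))
```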

Beyond the Golden Signals

The Golden Signals are a great foundation for effectively observing complex systems. But if you want to go above and beyond in your SRE initiatives, consider the following additional practices.

Chaos Engineering

Chaos engineering means deliberately introducing error or performance degradation events into a system, and then evaluating how it responds. For example, you could send malformed requests to see if they increase application error rate, or flood a Web service with high volumes of traffic to check how it impacts latency.

The purpose of chaos engineering is to help identify risks you may not have thought about by simulating problems that might occur in the real world.
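A toy version of fault injection might look like the following. The `handle` function stands in for a real service handler, and the 20 percent failure rate is arbitrary; dedicated chaos engineering tools work at the infrastructure level rather than inside application code like this.

```python
import random

def with_faults(handler, failure_rate, rng):
    """Wrap handler so that roughly failure_rate of calls raise an injected error."""
    def wrapped(request):
        if rng.random() < failure_rate:
            raise RuntimeError("injected fault")
        return handler(request)
    return wrapped

def handle(request):
    """Hypothetical service handler that always succeeds."""
    return f"ok:{request}"

def observed_error_rate(handler, requests):
    """Send every request and report the fraction that failed."""
    requests = list(requests)
    failures = 0
    for request in requests:
        try:
            handler(request)
        except RuntimeError:
            failures += 1
    return failures / len(requests)

rng = random.Random(42)  # seeded so the experiment is repeatable
chaotic = with_faults(handle, failure_rate=0.2, rng=rng)
print(observed_error_rate(chaotic, range(1000)))
```

Comparing the observed error rate against what your monitoring reports is a quick way to check that the errors signal is wired up correctly.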

Gameday

Gameday is a structured chaos engineering experiment. It involves running chaos engineering tests for a set period of time – such as a day – then evaluating the results and applying the insights to standard operations.

So, you can think of Gameday as a way of implementing chaos engineering, whereas chaos engineering itself is a high-level practice that doesn't include specific implementation guidance.

Synthetic User Monitoring

Again, synthetic user monitoring is a monitoring technique that involves sending simulated (or "synthetic") requests to a system to evaluate how it responds. Unlike in the case of chaos engineering, the synthetic requests aren't designed to cause a failure. Instead, they trigger application behavior that can be measured to assess overall performance.

Synthetic monitoring may not be necessary if your app generates a lot of metrics and logs on its own. But it's helpful in cases where an app doesn't receive enough organic traffic to measure all of the signals effectively, or where you want to monitor specific types of requests that aren't being made by real-world users.
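Here is a minimal sketch of a synthetic probe. To keep it self-contained, `fake_checkout` is a hypothetical stand-in for a real network call; in practice the target would be an HTTP request to your service.

```python
import time

def probe(target, request, timeout_ms):
    """Send one synthetic request to target (any callable) and record the outcome."""
    start = time.perf_counter()
    try:
        response = target(request)
        succeeded = True
    except Exception:
        response, succeeded = None, False
    elapsed_ms = (time.perf_counter() - start) * 1000
    return {
        "ok": succeeded and elapsed_ms <= timeout_ms,
        "latency_ms": elapsed_ms,
        "response": response,
    }

def fake_checkout(request):
    """Hypothetical endpoint: rejects empty carts, confirms everything else."""
    if not request.get("cart"):
        raise ValueError("empty cart")
    return {"status": "confirmed"}

good = probe(fake_checkout, {"cart": ["sku-1"]}, timeout_ms=1000)
bad = probe(fake_checkout, {"cart": []}, timeout_ms=1000)
print(good["ok"], bad["ok"])
```

Running a probe like this on a schedule yields latency and error data points even when no real users are exercising that request path.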

Measuring the Golden Signals with groundcover

If you've decided that the Golden Signals are the monitoring methodology that best floats your boat, groundcover has you covered. Our unique approach to observability uses eBPF, a Linux kernel technology that runs sandboxed programs inside the kernel, to collect data from across all applications and services – so no matter which logs, metrics, and traces your app produces itself, you can get the insights you need to support the Golden Signals method using groundcover.

From there, you can drill down into the data, identify root causes and remediate issues using a variety of data analysis and visualization features.

Going for Observability Gold

You don't have to use the Golden Signals to observe complex systems. But they're arguably the most comprehensive and effective method for making sense of the vast sets of information produced by modern applications and services, identifying performance risks, and optimizing the end-user experience. And with a tool like groundcover on your side, getting and analyzing the data you need to measure the Golden Signals has never been easier.
