Kubernetes Sidecar Containers: Use Cases and Best Practices

Explore essential steps for configuring sidecars and best practices for monitoring to ensure sidecar containers don't hinder application deployment.

Containers have at least one thing in common with the Beatles – sometimes they also need a little help from their friends to get by. Those friends come in the form of sidecar containers, which serve as helpers that handle tasks like logging or configuration management.

When should you use sidecar containers in Kubernetes, and how can you make the most of them? Keep reading for answers to these questions as we unpack everything you need to know about Kubernetes sidecar containers.

What is a Kubernetes sidecar container?

In Kubernetes, a sidecar container is a container that runs alongside the main container that hosts the application itself. Both the sidecar and the main container run in the same Pod. You can have one or multiple containers that operate as sidecars alongside a main container.

As we mentioned above, the purpose of sidecar containers is to assist the primary application container in some way. For example, sidecars can help with managing logs and metrics, which is part of what makes sidecar containers important in the context of Kubernetes logging.

Sidecar vs. init container

It's important not to confuse sidecar containers with init containers. The latter are containers that run before an app's main container starts. They handle startup tasks, such as preparing the Pod environment for the main container. However, they run to completion and exit before the main container starts.

Thus, while init and sidecar containers can both assist primary containers in some way, the key difference between them is that sidecars run at the same time as the main container, not beforehand.
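To make the difference concrete, here is a minimal sketch of a Pod that uses both. The Pod name, container names, file paths and shell commands are illustrative assumptions, and the public busybox image stands in for real workloads. The container listed under initContainers has to finish before the two entries under containers start, while the sidecar keeps running next to the main container.

```yaml
# Sketch only: names, paths and commands are illustrative assumptions.
apiVersion: v1
kind: Pod
metadata:
  name: init-vs-sidecar-demo
spec:
  initContainers:
    # Runs to completion before anything under "containers" starts.
    - name: prepare-environment
      image: busybox:1.36
      command: ["sh", "-c", "echo 'prepared by init container' > /work/ready.txt"]
      volumeMounts:
        - name: workdir
          mountPath: /work
  containers:
    # Main container: starts only after the init container has exited successfully.
    - name: main-app
      image: busybox:1.36
      command: ["sh", "-c", "cat /work/ready.txt; while true; do sleep 3600; done"]
      volumeMounts:
        - name: workdir
          mountPath: /work
    # Sidecar: runs at the same time as the main container.
    - name: sidecar
      image: busybox:1.36
      command: ["sh", "-c", "echo 'sidecar running alongside main-app'; while true; do sleep 3600; done"]
  volumes:
    # Scratch volume that lives as long as the Pod does.
    - name: workdir
      emptyDir: {}
```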

When to use sidecar containers

Not every application deployment requires sidecar containers – and because sidecars increase the resource overhead and complexity of your deployment, you should avoid running sidecars when they're not necessary.

Typically, it makes sense to leverage sidecars under the following circumstances:

For sharing networks and storage

Because sidecars run inside the same Pod as a primary application container, they have access to the same network and storage resources. This makes sidecars handy in situations where you need to perform certain tasks related to networking and storage, such as enforcing network security rules or restricting access to storage resources. With a sidecar, you can handle these tasks separately from the main application.
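As a rough illustration of the shared network, the sketch below runs the public nginx image as the main container and a busybox sidecar that polls it over the loopback address. The Pod name, container names and the polling loop are assumptions made for this example, not a recommended health-check design.

```yaml
# Sketch only: names and the polling loop are illustrative assumptions.
apiVersion: v1
kind: Pod
metadata:
  name: shared-network-demo
spec:
  containers:
    - name: main-app
      image: nginx:1.27
      ports:
        - containerPort: 80
    - name: probe-sidecar
      image: busybox:1.36
      # Both containers share the Pod's network namespace,
      # so 127.0.0.1:80 in the sidecar reaches nginx in main-app.
      command:
        - sh
        - "-c"
        - |
          while true; do
            if wget -q -O /dev/null http://127.0.0.1:80/; then
              echo "main-app reachable"
            else
              echo "main-app not reachable"
            fi
            sleep 10
          done
```

No Service or port mapping is needed between the two containers, which is what makes this arrangement convenient for network-related helper tasks.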

For application logging

As noted above, sidecars are a great way to handle application logs. They can ingest log data from the main container, then transfer it to a log management or observability tool. That way, you don't have to build logic for log management directly into your main app. You can also more easily change your logging agents when you run them as sidecars because you can switch things up without having to modify your application itself.

For keeping application configuration up to date

Sidecar containers can host application configuration data, which the main container can read when starting up. This approach allows you to separate configurations from the application itself, making it easier to update the configuration without having to modify the actual application.
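One way this could look, assuming a hypothetical configuration file at /etc/app/app.conf and a placeholder loop in place of a real configuration source, is a sidecar that writes the file into a shared emptyDir volume that the main container mounts read-only:

```yaml
# Sketch only: the file path, its contents and the refresh loop are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: config-sidecar-demo
spec:
  containers:
    - name: main-app
      image: busybox:1.36
      # Waits for the config file, prints it at startup, then idles.
      command:
        - sh
        - "-c"
        - |
          until [ -f /etc/app/app.conf ]; do sleep 1; done
          echo "starting with config:"
          cat /etc/app/app.conf
          while true; do sleep 3600; done
      volumeMounts:
        - name: config
          mountPath: /etc/app
          readOnly: true
    - name: config-sidecar
      image: busybox:1.36
      # Placeholder for fetching config from a real source.
      # Writes to a temp file and renames it so readers never see a partial file.
      command:
        - sh
        - "-c"
        - |
          while true; do
            echo "log_level=info" > /etc/app/app.conf.tmp
            mv /etc/app/app.conf.tmp /etc/app/app.conf
            sleep 60
          done
      volumeMounts:
        - name: config
          mountPath: /etc/app
  volumes:
    - name: config
      emptyDir: {}
```

With this split, changing how configuration is produced means changing only the sidecar, not the application image.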

Sidecar container use cases

Most sidecar container deployments target the following types of use cases and goals:

Data replication and synchronization

Because sidecar containers can store and manage data separately from the application they support, they are a good solution for replicating data and keeping it in sync with other resources. You can push data from the main container to a sidecar, then let the sidecar do the work of replicating or syncing data.

Load balancing and service discovery

Sidecar containers can handle network-related tasks like load balancing and service discovery. By outsourcing these tasks to a sidecar, you free your application itself from having to implement the requisite logic, and you make it easier to update or modify load balancing and service discovery settings as your network configuration changes. In this context, sidecar containers can serve as an Istio alternative because they allow you to handle work that would otherwise require a service mesh.

Logging and monitoring

Logging and monitoring are a very common use case for sidecar containers. Sidecars can operate as logging and monitoring agents that collect data from the application they are supporting, then forward that data to logging or monitoring tools as needed.

To be clear, we're not saying that sidecars should host full-blown logging and monitoring apps. Typically, you'd host those apps in a separate Pod, or possibly outside your Kubernetes cluster. However, sidecars can host the agents that connect to your logging and monitoring apps, eliminating the need to build logic into your main container for interfacing with those apps.

Security and authentication

Sidecars lend themselves well to handling various security and authentication-related tasks. They can monitor network traffic and enforce firewall rules, for example, or perform decryption during authentication procedures so that the main application doesn't become bogged down with that task.

Benefits of using sidecar containers in Kubernetes

No matter which use cases you leverage sidecars for, sidecar containers can deliver a range of important benefits:

Durability

Sidecars are durable in the sense that if your main container crashes or becomes slow to respond due to an issue like buggy code, the sidecar will typically not be affected.

This benefit comes in handy if, for instance, your main container crashes and you want to log the event. If logging were handled inside the main container, the crash might cause logging to stop, depriving you of the data you need to troubleshoot the issue. But with a durable sidecar, your logs will remain intact so you can gain context on why the main container crashed.

Scalability

You can size sidecars separately from main containers by assigning each container its own resource requests and limits, or by adding or removing sidecar containers in the Pod specification. This is beneficial because it helps achieve an optimal balance between performance and resource consumption. If the load on your sidecars increases, you can raise their allocation, then lower it again later to avoid reserving resources that aren't needed.

Modularity

Kubernetes sidecars make deployments more modular because they break functionality into separate containers. When used in modular applications, sidecar containers help reduce the risk that a problem in one container will cause your entire Pod to fail. Modularity also simplifies troubleshooting because it helps you trace problems to the individual container where they occurred. Last but not least, modularity allows you to update some parts of your deployments separately from others, which saves time and reduces the complexity that developers have to contend with when modifying apps.

Independence

Sidecars assist main containers, but they operate independently from them. This means that problems with the primary container won't bring your entire application down – and likewise, if you have an issue within a sidecar, such as a buggy logging process, it won't cause the main container to fail.

Put simply, sidecars and main containers work together but separately. That independence is a benefit from the perspective of overall workload stability and performance.

Security

The independence of sidecar containers is an advantage from a security perspective, too, because it means that security issues in the sidecar are less likely to impact the main application, and vice versa. In addition, if you discover a vulnerability in one of your containers, you can patch and rebuild that container's image without having to modify the images of the other containers inside the Pod.

Reusability

Sidecars offer the benefit of being reusable. You can deploy the same sidecar container for multiple Pods if you need to perform the same types of complementary tasks for multiple containers. For example, if you create a sidecar that serves as a logging agent and you want to use the agent for more than one app, you can easily do so with few, if any, modifications needed.

Challenges of using sidecar containers

While sidecar containers offer many benefits, they also present some potential downsides:

Higher resource consumption and cost

The greatest challenge of sidecars is that they increase the overall resource consumption – and, by extension, the infrastructure costs – of a Kubernetes cluster. In general, you'll use fewer resources if you cram all of the functionality you need into a main container rather than offloading some of it to a sidecar.

This extra overhead and cost are often worth it if they lead to greater reliability and performance. Still, it's important to ensure that the drawbacks of sidecars don't outweigh the benefits in this respect.

Debugging challenges

In many ways, sidecars simplify debugging because they create a multi-container Pod setup that separates your application deployment into distinct components. However, sidecars can also complicate debugging because they add complexity to your environment, leading to more variables that you have to consider when troubleshooting an issue.

For instance, if your application is experiencing high latency and it depends on a sidecar for load balancing, you'd need to evaluate whether the root cause is a problem with the way your sidecar is handling the load balancing itself, or with the way it's forwarding traffic to the main container.

Potential latency

Containers inside a Pod use a loopback interface to communicate over the network. Traffic usually flows quickly across the loopback, but in situations where the loopback is not properly configured, or where insufficient CPU and memory resources are available on your nodes for moving the packets, you could run into latency issues. In turn, you may face delayed communication between sidecars and main containers – an issue you would avoid if everything ran inside a single container and didn't depend on a loopback interface.

Implementing a Kubernetes sidecar container

Implementing sidecar containers in Kubernetes is relatively straightforward. Here's an overview:

Step 0: Prerequisites

First, you'll need a main application container. You also need to know which functionality your application requires to run but does not handle itself. That's the functionality that you'll want the sidecar to handle.

Step 1: Create a sidecar container image

Next, create an image for a sidecar container that performs the functionality your app requires. In many cases, you can use public images for this purpose; for instance, most logging and observability tools offer container images on Docker Hub that can serve as the basis for logging and monitoring sidecars.

Step 2: Create a Pod

With your main application image and your sidecar image in place, define a Pod that runs both containers simultaneously. You'll also want to make sure to configure any networking, storage or other resource settings that the containers require to interact with each other.

For example, here's what a basic Pod containing one sidecar container might look like:
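A minimal sketch of such a Pod, under some assumptions: the public nginx image stands in for the main application, a busybox container running tail stands in for a real logging agent, and the Pod and container names are made up for this example. The two containers share an emptyDir volume mounted at nginx's log directory, so the sidecar can read the access log that the main container writes.

```yaml
# Sketch only: names are assumptions; busybox "tail" stands in for a real logging agent.
apiVersion: v1
kind: Pod
metadata:
  name: app-with-logging-sidecar
  labels:
    app: demo
spec:
  containers:
    # Main application container.
    - name: main-app
      image: nginx:1.27
      ports:
        - containerPort: 80
      volumeMounts:
        # nginx writes access.log and error.log into the shared volume.
        - name: logs
          mountPath: /var/log/nginx
    # Sidecar: streams the access log to its own stdout.
    - name: log-sidecar
      image: busybox:1.36
      # -F keeps retrying until nginx has created the file.
      command: ["sh", "-c", "tail -n +1 -F /var/log/nginx/access.log"]
      volumeMounts:
        - name: logs
          mountPath: /var/log/nginx
          readOnly: true
  volumes:
    - name: logs
      emptyDir: {}
```

If this manifest were saved as pod-with-sidecar.yaml (a file name chosen for this example), it could be applied with kubectl apply -f pod-with-sidecar.yaml, and the sidecar's output read with kubectl logs app-with-logging-sidecar -c log-sidecar.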

Step 3: Deploy the Pod

Finally, deploy the Pod using kubectl. As long as you configured everything properly, your sidecar will now run alongside the main container, performing whichever auxiliary functionality you designed it to handle.

Sidecar container best practices

To get the most out of a Kubernetes sidecar, consider the following best practices:

Single responsibility principle

Sidecars work best when they are designed to do one thing and do it well. For that reason, strive to assign just one task to each sidecar. If your application requires multiple types of auxiliary functionality – for example, if you need to perform both logging and load balancing – create separate sidecars for each task.

Create small and modular apps

Along similar lines, your applications themselves should be designed to handle a specific responsibility. If you try to pack too much functionality into a single app, it becomes harder to deploy the necessary sidecars to support it, not to mention to manage and troubleshoot the app itself.

In general, small and modular apps, complemented by small and modular sidecars, make for the best Kubernetes deployments.

Set resource limits and monitor sidecar containers

Just like any other type of container, sidecar containers can become prone to excess resource consumption or poor performance. Safeguard your deployments against these risks by configuring resource limits (to prevent sidecars from consuming so many resources that they undercut the performance of other containers) and monitoring your sidecars to detect performance issues.
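As a sketch, requests and limits are set per container, so a sidecar can be given a much smaller budget than the main container. The Pod name and the CPU and memory figures below are placeholder assumptions for illustration, not sizing recommendations.

```yaml
# Sketch only: the CPU and memory values are placeholders, not recommendations.
apiVersion: v1
kind: Pod
metadata:
  name: sidecar-resources-demo
spec:
  containers:
    - name: main-app
      image: nginx:1.27
      resources:
        requests:
          cpu: 250m
          memory: 128Mi
        limits:
          cpu: 500m
          memory: 256Mi
    - name: sidecar
      image: busybox:1.36
      command: ["sh", "-c", "while true; do sleep 3600; done"]
      resources:
        # Smaller budget so the sidecar cannot starve the main container.
        requests:
          cpu: 50m
          memory: 32Mi
        limits:
          cpu: 100m
          memory: 64Mi
```

On clusters where the metrics-server add-on is installed, kubectl top pod sidecar-resources-demo --containers reports usage per container, which is one simple way to check whether the sidecar's limits are set sensibly.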

Enforce security and permissions limits

Sidecar containers shouldn't have unfettered access to main containers. Instead, when you configure your Pod, be sure to allow your various containers to interact with each other only to the extent necessary to perform their required tasks.
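One possible way to express this in a Pod spec is to combine a restrictive securityContext on the sidecar with a read-only mount of the shared volume. The names and the user ID 65534 are assumptions for this sketch, and the right settings depend on what your sidecar actually needs to do.

```yaml
# Sketch only: names and the user ID are assumptions; adjust to the sidecar's real needs.
apiVersion: v1
kind: Pod
metadata:
  name: sidecar-security-demo
spec:
  containers:
    - name: main-app
      image: nginx:1.27
      volumeMounts:
        - name: logs
          mountPath: /var/log/nginx
    - name: log-sidecar
      image: busybox:1.36
      command: ["sh", "-c", "tail -n +1 -F /var/log/nginx/access.log"]
      securityContext:
        runAsNonRoot: true              # refuse to start as root
        runAsUser: 65534                # unprivileged user ID
        allowPrivilegeEscalation: false
        readOnlyRootFilesystem: true
        capabilities:
          drop: ["ALL"]                 # no Linux capabilities needed to read a file
      volumeMounts:
        # The sidecar can read the logs but cannot modify them.
        - name: logs
          mountPath: /var/log/nginx
          readOnly: true
  volumes:
    - name: logs
      emptyDir: {}
```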

Have a reason for using sidecars

Using sidecars is generally a best practice in Kubernetes, but not every app requires one. If you have a very simple, stateless application, sidecars may be overkill – and creating them will needlessly increase the overhead and complexity of your environment. So, before deciding to deploy sidecars just because they're beneficial in general, make sure they are beneficial for the specific app you are working with.

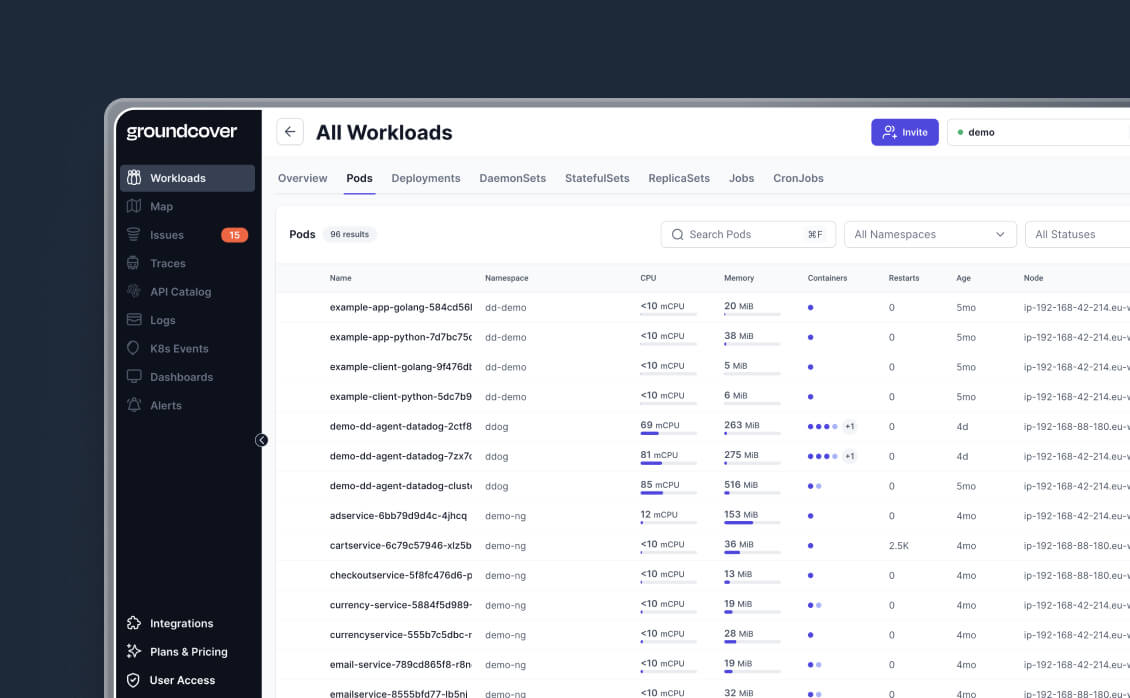

How groundcover can help

No matter how many sidecars you deploy or what they do, groundcover makes it easy to keep tabs on all of them – as well as on your main application containers – and ensure that everything runs smoothly.

In addition, because groundcover can collect monitoring data from workloads using eBPF – a more efficient and simpler approach than relying on sidecars – groundcover can reduce the total number of sidecars that you have to deploy in the first place, while giving you even more visibility than you'd gain from traditional sidecars.

Configure with care

In many cases, a Kubernetes sidecar is just as important as a main application container in helping to achieve optimal performance, while also simplifying development and management. But sidecars don't optimize or manage themselves, which is why it's important to take the right steps when configuring sidecars, as well as to monitor them on an ongoing basis so that sidecar containers don't become the weakest link in your application deployment.
