Kafka Logging: Strategies, Tools & Techniques for Log Analysis

Explore Kafka log management. Optimize Kafka partitions for efficient logging, reduce resource usage in clusters, and ensure data accessibility.

If Franz Kafka, the author famous for works about absurdly incomprehensible processes and personalities, wrote about managing logs for Kafka the open source data streaming tool, his take on Kafka monitoring would probably be pretty complex. It would involve lots of arcane tools that integrated poorly with each other, leaving admins scratching their heads about how to keep track of the data produced by their Kafka clusters.

Fortunately, Kafka log management in the real world isn't so Kafkaesque. It does require an understanding of Kafka's various components – including topics, partitions and offsets – but once you get a handle on those, Kafka logging turns out to be quite a bit simpler than, say, the experience of Josef K. in The Trial.

To prove the point, this article walks through the ins and outs of Kafka log management. It builds on our previous Kafka consumer best practices post, which explains the essentials of what Kafka does and how its various components work. Today, we'll dive deeper into Kafka log management by explaining how to optimize Kafka partitions to make Kafka logging more efficient.

To be clear, we're talking here about optimizing the Kafka log structure, not about working with the log data printed by Kafka. That's a separate topic, and we'd love to tell you about it, but it will have to wait for another time.

How Kafka topics and partitions work

By way of background, let's go over the basics of what Kafka topics and partitions are and how they work.

A topic in Kafka is a category of messages that you can use to organize your data streams. In other words, topics make it possible to separate streaming data into different categories, which makes it easier to ensure that you send the right types of data to the right destinations.

Partitions allow you to break Kafka topics into even smaller components, then distribute them across multiple brokers (a broker is a server within a Kafka cluster that sends and receives data). Partitions are a big deal because they help you distribute the work of processing topics across multiple brokers, which makes it possible to take full advantage of Kafka's distributed architecture. Without partitions, each topic would have to be hosted on just one broker and processed by a single consumer, increasing the risk of performance problems or outright unavailability in the event that the broker runs out of resources or crashes.
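To make the idea concrete, here is a minimal Python sketch of how a keyed message might be mapped to a partition. It is an illustration under stated assumptions, not Kafka's implementation: the function name choose_partition is hypothetical, and zlib.crc32 stands in for the murmur2 hash that Kafka's Java client uses, so real partition numbers will differ.

```python
import zlib


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a message key to a partition index (illustrative only).

    Assumption: zlib.crc32 stands in for the hash function. Kafka's Java
    client uses murmur2, so real partition numbers will differ.
    """
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    return zlib.crc32(key) % num_partitions


# Messages with the same key always land on the same partition,
# while different keys can spread across the available partitions.
for key in (b"user-1", b"user-2", b"user-1"):
    print(key, "->", choose_partition(key, num_partitions=6))
```

The takeaway is that the partition count caps how widely a topic's traffic can be spread, which is why the choice matters later on.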

How are Kafka partitions stored?

The data contained in partitions is streamed in real time. But that doesn't mean it disappears as soon as Kafka finishes delivering it.

On the contrary, Kafka retains partitions for a while. It does so by dividing partitions into segments, each of which represents a part of the partition between two offset points. Every segment contains an archived set of data (except the last segment, which remains the active, non-archived part of the partition until Kafka rolls over to a new segment). By default, Kafka rolls over to a new segment when the active one reaches one gigabyte (log.segment.bytes) or is one week old (log.roll.hours), whichever comes first.

In addition to dividing partitions into segments, Kafka stores indexes that help to resolve offsets and timestamps quickly during seeking operations.
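As a rough illustration of how segments relate to offsets, the Python sketch below finds the segment that would hold a given offset. Segment files on disk are named after their base offset, zero-padded to 20 digits. The base offsets in the example are hypothetical, and the binary search is only a stand-in for the lookup Kafka performs with its own index files.

```python
from bisect import bisect_right
from typing import List


def segment_file_name(base_offset: int) -> str:
    """Segment files are named after their base offset, zero-padded to 20 digits."""
    return f"{base_offset:020d}.log"


def find_segment(base_offsets: List[int], target_offset: int) -> int:
    """Return the base offset of the segment that would contain target_offset.

    base_offsets must be sorted in ascending order.
    """
    position = bisect_right(base_offsets, target_offset) - 1
    if position < 0:
        raise ValueError("offset is older than the earliest retained segment")
    return base_offsets[position]


base_offsets = [0, 1500, 4200]  # hypothetical partition with three segments
print(segment_file_name(find_segment(base_offsets, 3000)))
```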

Kafka log management and monitoring

Now that you know how Kafka topics, partitions and segments work, let’s walk through common Kafka log management and monitoring tasks one-by-one.

Log retention

Kafka topics can become quite massive even if you don't replicate partitions – and if you do, they are even more massive. Log retention is one way of reducing the overall storage that your topics consume.

You can configure the log retention property in Kafka to set the maximum amount of time Kafka will retain a message. Once the newest message in a segment is older than the time you set, the whole segment becomes eligible for deletion on the next retention check (which runs every five minutes by default).

You can define Log Retention using the format:

log.retention.hours

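Minutes (log.retention.minutes) and milliseconds (log.retention.ms) are also supported. If you set more than one of these, the finer-grained unit takes precedence.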

Kafka also allows you to set a Log Retention policy based on maximum size using the format:

log.retention.bytes

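The limit applies to each partition, not to each segment or to the topic as a whole. A topic with many partitions can therefore occupy that many times the configured size, so be sure to configure the setting appropriately if you choose this approach.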
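The following Python sketch shows, in simplified form, how time-based and size-based retention might pick segments for deletion. The Segment class and the segments_to_delete function are hypothetical names introduced for illustration. The logic assumes that only whole, closed segments are removed, oldest first, and that the size rule deletes a segment only if the partition would still hold at least the configured number of bytes afterwards.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Segment:
    base_offset: int
    size_bytes: int
    newest_timestamp_ms: int  # timestamp of the newest message in the segment


def segments_to_delete(segments: List[Segment], now_ms: int,
                       retention_ms: Optional[int] = None,
                       retention_bytes: Optional[int] = None) -> List[int]:
    """Return base offsets of segments a retention pass would remove.

    segments must be ordered oldest first. The last one is treated as the
    active segment and is never selected. Pass None to disable a limit.
    """
    total = sum(s.size_bytes for s in segments)
    excess = total - retention_bytes if retention_bytes is not None else None
    doomed = []
    for seg in segments[:-1]:
        expired = (retention_ms is not None
                   and now_ms - seg.newest_timestamp_ms > retention_ms)
        oversized = excess is not None and excess - seg.size_bytes >= 0
        if not (expired or oversized):
            break  # deletion only ever trims the oldest end of the log
        if excess is not None:
            excess -= seg.size_bytes
        doomed.append(seg.base_offset)
    return doomed


log = [Segment(0, 400, 0), Segment(100, 400, 5_000), Segment(200, 400, 9_000)]
print(segments_to_delete(log, now_ms=10_000, retention_ms=6_000))
print(segments_to_delete(log, now_ms=10_000, retention_bytes=800))
```

Note that with a size limit of 900 bytes in this example nothing would be deleted, because removing the oldest 400-byte segment would drop the partition below the limit.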

Log cleanup: Deletion and compaction

You can configure Kafka to do one of two things when it's time to rotate a log:

• Delete it, which means, of course, removing it permanently and forever.

• Compact it, in which case Kafka retains the most recent message for each message key in the partition and deletes earlier messages that share that key. Thus, if you reset the consumer offset to 0 or switch to a new consumer group, you’ll still get at least the latest message for every key.

To tell Kafka whether to delete or compact logs during log rotation, use the setting:

log.cleanup.policy=delete

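Obviously, you'll want to replace delete with compact if you want to compact the logs. You can also set both values (compact,delete) to compact a log and still delete segments that exceed your retention limits.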
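To illustrate what compaction does to a log, here is a minimal Python sketch that keeps only the newest record for each key. The record layout (offset, key, value) and the key names are hypothetical. Real compaction runs in the background and skips the active segment, so consumers may still see some older duplicates.

```python
from typing import Dict, List, Tuple

Record = Tuple[int, str, str]  # (offset, key, value)


def compact(records: List[Record]) -> List[Record]:
    """Keep only the newest record for each key, preserving offset order."""
    latest: Dict[str, Record] = {}
    for offset, key, value in records:
        latest[key] = (offset, key, value)  # later offsets overwrite earlier ones
    return sorted(latest.values())


log = [
    (0, "user-1.email", "a@example.com"),
    (1, "user-2.email", "b@example.com"),
    (2, "user-1.email", "c@example.com"),
]
print(compact(log))
```

The surviving records keep their original offsets, which is why consumers reading a compacted log may see gaps in the offset sequence.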

Kafka monitoring

In addition to managing the data stored in topics and partitions, it's wise to monitor topics and partitions to ensure that Kafka is handling them efficiently, that you're not losing data for some reason and so on.

Since Kafka runs on the Java Virtual Machine (JVM), we can leverage the Java Management Extensions (JMX) exporter to fetch JVM metrics and other relevant metrics from brokers, producers and consumers to get insight into what's happening with topics and partitions.

Examples of the data you can pull using JMX include:

• CPU usage

• JVM memory used

• Time spent on garbage collection

• Messages in per topic

• Bytes in per topic

• Bytes out per topic

Some of this data is useful for monitoring overall Kafka performance and getting context on situations where topics or partitions are not being streamed in the way they should. Other data provides specific insight into how rapidly Kafka is adding messages to topics, which allows you to monitor topic performance over a period of time.
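The per-topic figures in the list above are exposed by brokers as JMX MBeans under kafka.server:type=BrokerTopicMetrics. As a small sketch, the Python helper below builds the object names you would query or map in an exporter configuration. The function name broker_topic_mbean and the topic name orders are hypothetical.

```python
from typing import Optional

# Broker metrics behind "messages in", "bytes in" and "bytes out" per topic.
BROKER_TOPIC_METRICS = ("MessagesInPerSec", "BytesInPerSec", "BytesOutPerSec")


def broker_topic_mbean(metric: str, topic: Optional[str] = None) -> str:
    """Build the JMX object name for a broker topic metric.

    Without a topic, the name refers to the broker-wide aggregate.
    """
    if metric not in BROKER_TOPIC_METRICS:
        raise ValueError(f"unsupported metric: {metric}")
    name = f"kafka.server:type=BrokerTopicMetrics,name={metric}"
    return f"{name},topic={topic}" if topic else name


for metric in BROKER_TOPIC_METRICS:
    print(broker_topic_mbean(metric, topic="orders"))
```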

To work with the data efficiently, you can export JMX metrics to Prometheus and visualize them with Grafana – or, you know, with any other monitoring, alerting and data visualization solutions of your choosing.

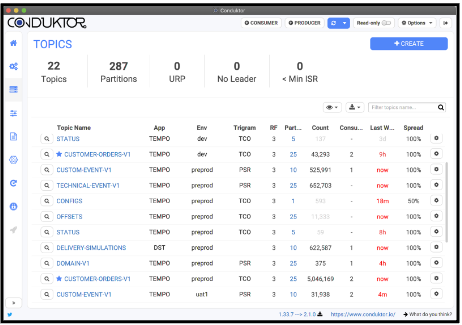

In addition, Conduktor is a powerful tool for monitoring, debugging, log visualization and troubleshooting on Kafka clusters. One of the features that helps troubleshoot issues related to specific topics, partitions and consumer groups is the temporary consumer function, which allows you to pull messages from a specific topic and visualize its content.

Kafka UI is another powerful open-source tool that allows you to visualize and inspect Kafka brokers, consumers, topics, configurations and more.

We could go on – there are other great Kafka monitoring tools out there – but suffice it to say that whichever solution you choose, your goal should be to ensure that you can effectively drill down into Kafka monitoring data in ways that allow you to figure out exactly what's happening with your topics, partitions and other core Kafka data components.

Best practices for Kafka log management

Now that the basics of log retention and log rotation are out of the way, let's talk about some practices that can help you work with logs as effectively as possible in Kafka.

Understand why you use Kafka – and manage logs accordingly

For starters, it's critical to align your log management strategy with your overall Kafka strategy.

You can use Kafka as part of many applications, and each use case is likely to have different requirements when it comes to log management. One app might be subject to regulatory mandates that require you to retain data for a specific period of time, for example, whereas in other situations log retention is optional. Or, you may be running Kafka on a system where storage space is limited or expensive, which creates an incentive to reduce overall log size.

The point here is that you should assess what you're using Kafka for, then figure out how much data you need to retain, and for how long, based on your use case. There is no such thing as a one-size-fits-all Kafka log retention or rotation strategy.

Leverage compaction

Compaction can dramatically improve the efficiency of disk usage in Kafka, especially if you define a clear and accurate compaction key (such as UID + Property Name) that holds the last version of the field instead of keeping all the changes that preceded it.

Here again, though, it's important to keep in mind that your ideal configuration will vary depending on your use case. Although in general compaction is a great way to make Kafka more efficient, in some scenarios you need to keep all versions of your data, making compaction infeasible.

Compress messages

Message compression is another great way to boost performance and efficiency in Kafka. By reducing the size of the messages that producers send and brokers store, compression can reduce network latency, increase throughput and optimize disk usage, at the cost of some extra CPU work.

You can configure compression by setting the compression.type property on the producer. Kafka supports the following values for this property:

• none

• gzip

• lz4

• snappy

• zstd

Apart from none, which disables compression, each of these options corresponds to a different compression algorithm.
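To get a feel for the savings, the Python sketch below compresses a batch of repetitive JSON messages with gzip, the only codec from the list that ships in Python's standard library. The message shape is made up for illustration, and the ratio you see in production will depend on your data, batch sizes and chosen codec.

```python
import gzip
import json
from typing import List, Tuple


def batch_sizes(messages: List[dict]) -> Tuple[int, int]:
    """Return (raw_bytes, gzip_bytes) for a batch of JSON-serializable messages."""
    raw = "\n".join(json.dumps(m) for m in messages).encode("utf-8")
    return len(raw), len(gzip.compress(raw))


# Hypothetical batch of similar events, compressed together as one unit.
batch = [{"user_id": i, "event": "page_view", "path": "/pricing"} for i in range(500)]
raw_size, compressed_size = batch_sizes(batch)
print(f"raw={raw_size} bytes, gzip={compressed_size} bytes")
```

Compressing whole batches rather than single messages is what lets the repeated field names and values shrink so well.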

In addition, brokers and topics have their own compression.type setting. Its default value, producer, tells the broker to keep whatever codec the producer used. If you set it to a specific codec instead, the broker recompresses incoming data with that codec whenever the producer used a different one. If the topic compression type matches the producer compression type, the broker doesn't have to decompress the data and compress it again.
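The matching rule can be sketched in a few lines of Python. This is a simplification under stated assumptions: the function name broker_recompresses is hypothetical, the topic-level values producer and uncompressed are the ones Kafka accepts for the broker and topic setting, and other reasons a broker might rewrite batches are ignored.

```python
def broker_recompresses(producer_codec: str, topic_codec: str) -> bool:
    """Return True if the broker would need to recompress incoming batches.

    Simplified model: only compares the producer's codec with the topic's
    compression.type and ignores other triggers for rewriting batches.
    """
    if topic_codec == "producer":
        return False  # the broker keeps whatever codec the producer used
    if topic_codec == "uncompressed":
        topic_codec = "none"  # topic-level name for "no compression"
    return producer_codec != topic_codec


print(broker_recompresses("lz4", "producer"))
print(broker_recompresses("lz4", "lz4"))
print(broker_recompresses("gzip", "zstd"))
```

Avoiding the recompression path saves broker CPU, which is one reason to leave the topic setting at producer unless you have a specific need.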

Backup data to cold storage

If you need to retain Kafka data for a relatively long period of time but you don't want to exhaust the storage resources of your cluster with old data, consider backing up the log files and moving them to an external archival storage location.

You can always recover the logs from there if you need them, but you won't be tying up the storage resources of your cluster on log data that you’re not likely to have to access frequently.
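If you script such a backup yourself, one reasonable precaution is to copy only closed segments and skip the active one, which is still being written. The Python sketch below picks those files from a partition directory listing. It assumes the standard naming scheme, in which segment files are named after their zero-padded base offset. The function name and file names are hypothetical, and a real backup would also need the matching index files and a tested restore procedure.

```python
from typing import List


def archivable_segments(file_names: List[str]) -> List[str]:
    """Return the closed .log segment files from a partition directory listing.

    The segment with the highest base offset is the active one and is skipped.
    Zero-padded names mean a plain lexical sort is also a numeric sort.
    """
    logs = sorted(name for name in file_names if name.endswith(".log"))
    return logs[:-1]


listing = [
    "00000000000000000000.log",
    "00000000000000000000.index",
    "00000000000000001500.log",
    "00000000000000004200.log",  # active segment
]
print(archivable_segments(listing))
```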

Partition intelligently

Effective partitioning is paramount to efficient Kafka log management. You should choose the right number of total partitions, since the partition count caps how many consumers in a single consumer group can read a topic in parallel (each partition is assigned to at most one consumer in the group), which in turn influences the performance of your cluster.

To decide how many partitions to set up, analyze the amount of traffic you expect to need to process and the computation work required to consume and process the messages. Then, determine the optimal number of consumers that you want to have running during periods of high load and scale down if necessary.

It's also a best practice to choose partition counts that are divisible by both 2 and 3 (such as 6, 12 or 24). That way, the partitions divide evenly across the most common consumer counts, so each consumer receives the same number of partitions, increasing consistency and efficiency.
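The sizing steps above can be sketched in Python as follows. Both functions are hypothetical helpers and the throughput figures are invented. The sketch assumes you can estimate peak message rate and the rate a single consumer can handle, then rounds the result up to a multiple of 6 so that it stays divisible by 2 and 3.

```python
import math
from typing import List


def suggest_partition_count(peak_msgs_per_sec: float,
                            msgs_per_sec_per_consumer: float,
                            multiple_of: int = 6) -> int:
    """Estimate a partition count and round it up to a multiple of 6."""
    consumers_needed = math.ceil(peak_msgs_per_sec / msgs_per_sec_per_consumer)
    return max(multiple_of, math.ceil(consumers_needed / multiple_of) * multiple_of)


def partitions_per_consumer(num_partitions: int, num_consumers: int) -> List[int]:
    """Spread partitions as evenly as possible across consumers in one group."""
    base, extra = divmod(num_partitions, num_consumers)
    return [base + 1 if i < extra else base for i in range(num_consumers)]


partitions = suggest_partition_count(50_000, 6_000)
print(partitions)
print(partitions_per_consumer(partitions, 3))  # even split
print(partitions_per_consumer(partitions, 5))  # uneven split
```

With 12 partitions, groups of 2, 3, 4 or 6 consumers all get an equal share, while a group of 5 leaves some consumers with more work than others.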

Get excited about Kafka logging!

If you've read this far, you know that Kafka logging is a somewhat complicated topic – although, again, it's quite a bit less complicated than the typical Kafka novel plot. The data from Kafka streams is broken down into different segments, and depending on what you're using the log data for, your priorities for log retention and rotation could vary widely.

Nonetheless, figuring out how best to manage Kafka log data is important if you want to optimize the performance (not to mention the infrastructure costs) of your clusters by using resources efficiently. That's why it's well worth setting aside some time to think strategically about which log management rules to configure, rather than settling for the default settings.

You should also deploy Kafka monitoring, visualization and debugging tools to ensure that you're able to collect and interpret the right information to help troubleshoot issues related to log management.

We know – log management isn't exactly the most exciting aspect of Kafka in most people’s eyes. But it's an essential task if you want to eliminate unnecessary resource usage within your clusters, while also ensuring that you have access to your data for as long (or as short) a period of time as you need.
